from collections import Counter
from pathlib import Path

import numpy as np
import pandas as pd
import torch
from datasets import ClassLabel, Dataset
from sklearn.metrics import f1_score
from transformers import (
    AutoModelForSequenceClassification,
    AutoTokenizer,
    Trainer,
    TrainingArguments,
)

# Some column string identifiers

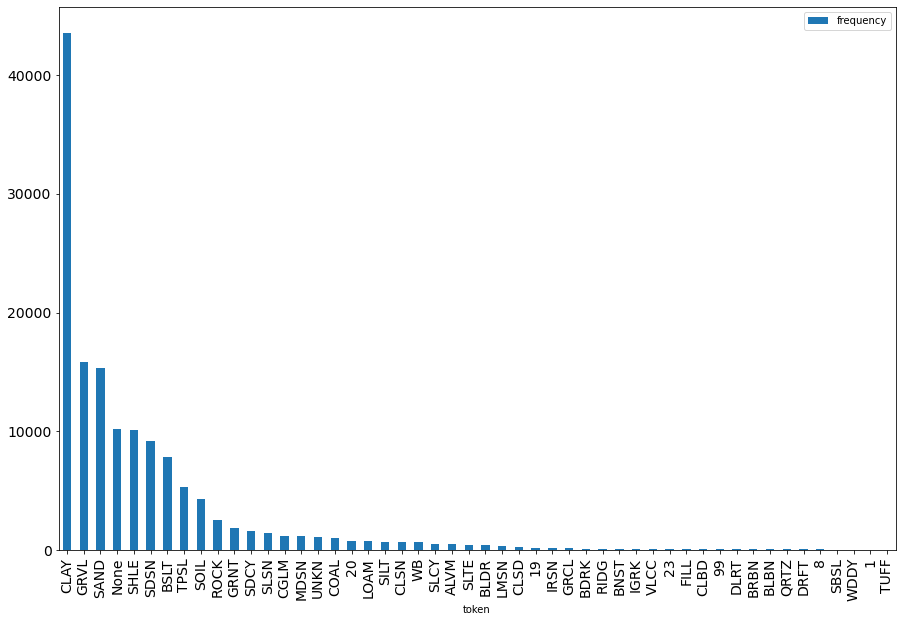

MAJOR_CODE = "MajorLithCode"

MAJOR_CODE_INT = "MajorLithoCodeInt"  # Integer-encoded copy of MAJOR_CODE: Hugging Face sequence-classification models expect integer class ids as labels, not strings.

MINOR_CODE = "MinorLithCode"

DESC = "Description"
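A minimal sketch of the label encoding described above, assuming the labels are plain strings in the `MajorLithCode` column. It uses only the standard library so it runs on its own; the toy rows and the helper names (`build_label_maps`, `encode_rows`) are hypothetical. Sorting the distinct labels keeps the id assignment deterministic across runs.

```python
from typing import Dict, List, Tuple

# Column names mirroring the constants defined above.
MAJOR_CODE = "MajorLithCode"
MAJOR_CODE_INT = "MajorLithoCodeInt"


def build_label_maps(labels: List[str]) -> Tuple[Dict[str, int], Dict[int, str]]:
    """Assign a stable integer id to each distinct string label (sorted order)."""
    classes = sorted(set(labels))
    label2id = {name: i for i, name in enumerate(classes)}
    id2label = {i: name for name, i in label2id.items()}
    return label2id, id2label


def encode_rows(rows: List[dict], label2id: Dict[str, int]) -> List[dict]:
    """Return copies of the rows with an extra integer label column added."""
    return [{**row, MAJOR_CODE_INT: label2id[row[MAJOR_CODE]]} for row in rows]


# Hypothetical toy records; the real data come from the project's own files.
rows = [
    {MAJOR_CODE: "SAND", "Description": "fine sand"},
    {MAJOR_CODE: "CLAY", "Description": "stiff clay"},
    {MAJOR_CODE: "SAND", "Description": "coarse sand"},
]

label2id, id2label = build_label_maps([r[MAJOR_CODE] for r in rows])
encoded = encode_rows(rows, label2id)
print(label2id)                              # {'CLAY': 0, 'SAND': 1}
print([r[MAJOR_CODE_INT] for r in encoded])  # [1, 0, 1]
```

The resulting `label2id`/`id2label` dictionaries can be passed to `AutoModelForSequenceClassification.from_pretrained` along with `num_labels`, so that predictions map back to lithology codes. On a `Dataset`, the `ClassLabel` feature imported above offers `str2int`/`int2str` for the same purpose.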